Chapter 12 Model Fitting and Model Fit (OLS)

12.1 Least Squares Estimation and the Decomposition of Variance

The linear models in the last chapter and for much of this book are fit to data using a method called “ordinary least squares” (OLS). This chapter explores the meaning of OLS and related statistics, including , as well as some alternative methods for bivariate regression.

12.2 OLS regression

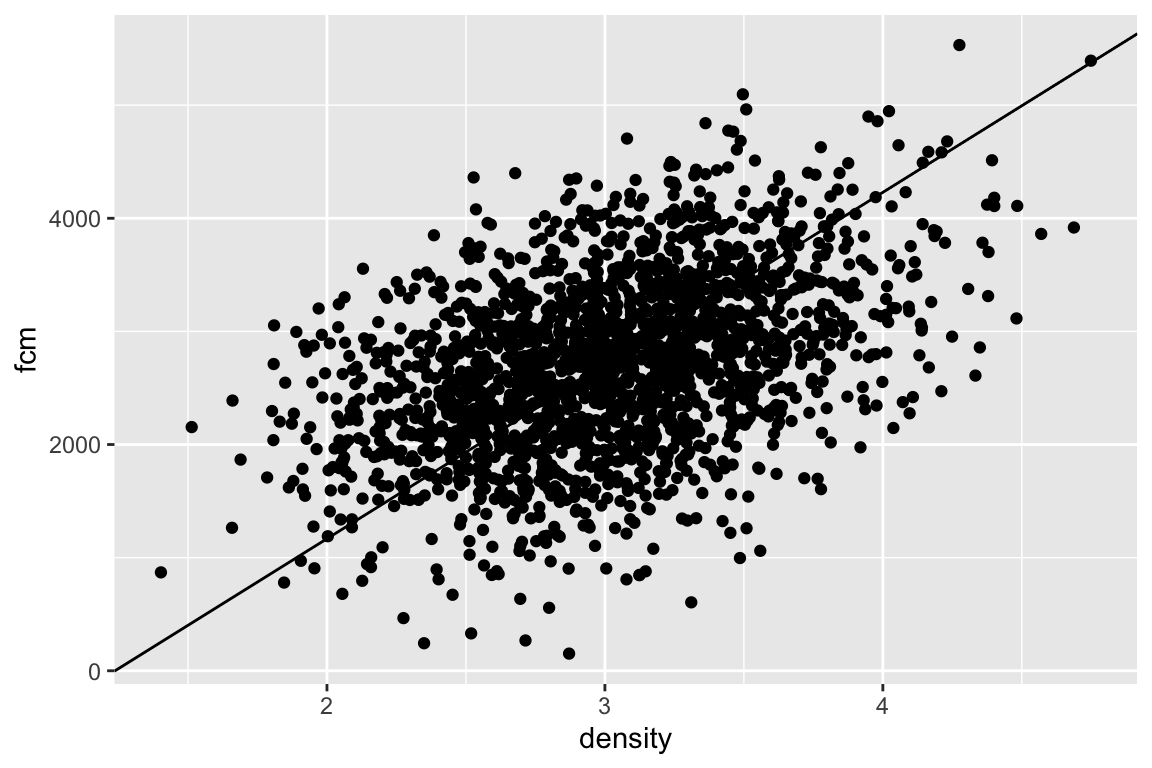

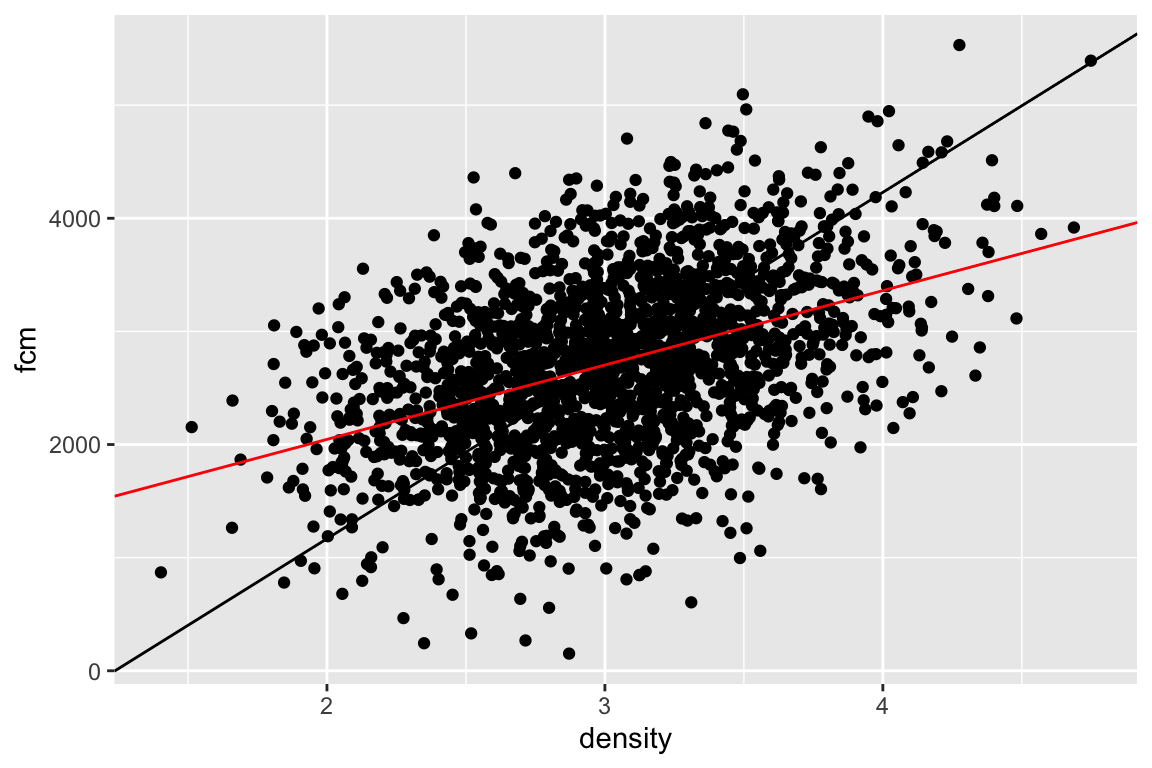

The fake data illustrated in the scatterplot above (Figure ??) were modeled to look something like the squirrel fecal cortisol metabolite data in the previous chapter. If a typical student is asked to draw a regression line through the scatter, they typically draw a line similar to that in Figure ??. This line is not the OLS regression line but the major axis of an elipse that encloses the scatter of points–that students invariably draw this line suggests that the brain interprets the major axis of an elliptical scatter of points as a trend (This major axis line is an alternative method for estimating a slope and is known as standard major-axis regression. More about this at the end of this chapter.)

The OLS regression line is the red line in Figure ?? – the standard major axis line is left for comparison). The OLS regression line

- passes through the bivariate mean (, ) of the scatter, and

- minimizes the sum of the squared deviations from each point to it’s modeled value

There are an infinite number of lines that pass through the bivariate mean (think of anchoring a line at the bivariate mean and spinning it). The OLS line is the line that minimizes the squared (vertical) deviations (“least squares”).

For a bivariate regression, the slope (coefficient of ) of the OLS model fit is computed by

This equation is worth memorizing. We will generalize this into a more flexible equation in a few chapters.

12.3 How well does the model fit the data? and “variance explained”

Let’s switch to real data.

- Source: Dryad Digital Repository. https://doi.org/10.5061/dryad.056r5

- File: “Diet-shift data.xls”

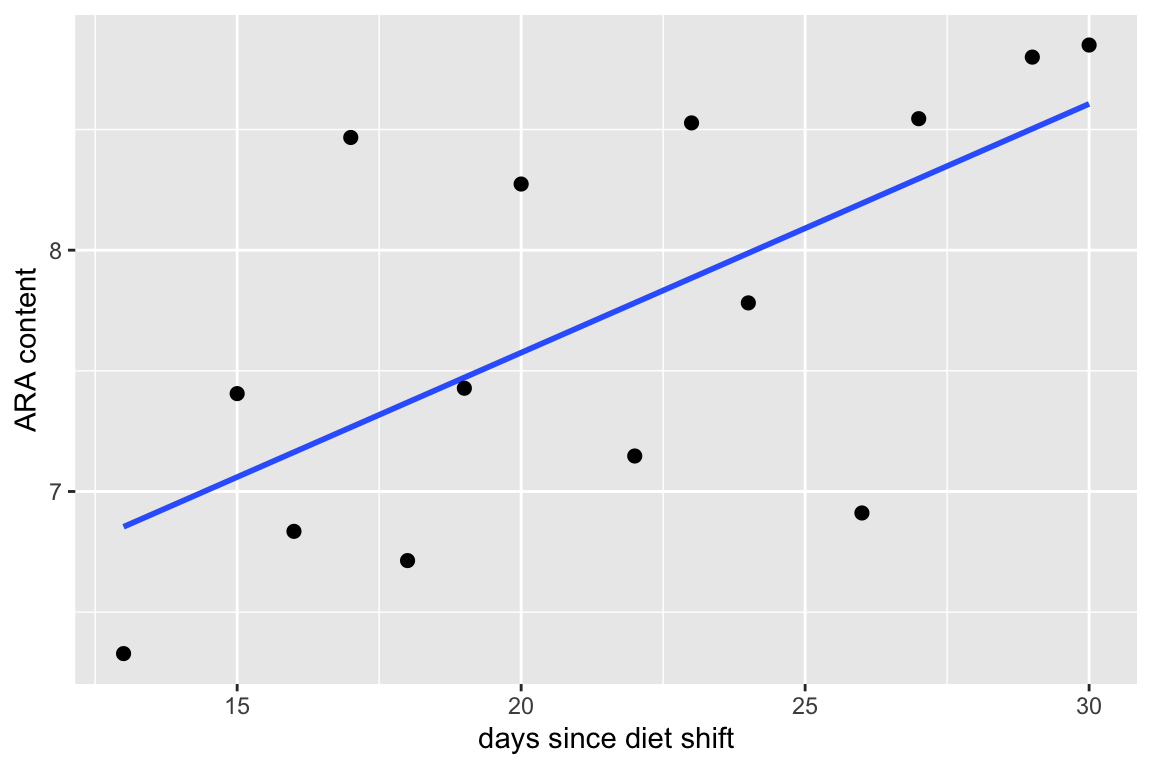

Fish require arachidonic acid (ARA) and other highyly unsaturated fatty acids in their diet and embryo and yolk-stage larvae obtain these from yolk. Fuiman and Faulk (xxx) designed an experiment to investigate if red drum (Sciaenops ocellatus) mothers provision the yolk with ARA from recent dietary intake or from stored sources in somatic tissues. The data below are from experiment 8. The x-axis is the days since a diet shift to more and less ARA () and the y-axis is the ARA content of the eggs ().

The statistic is a measure of the fit of a model to data. The for the fit of the egg data is 0.42. is the fraction of two variances , or, the fraction of the variance of “explained by the model.” The value of ranges from zero (the fit cannot be any worse) to one (the fit is “pefect”).

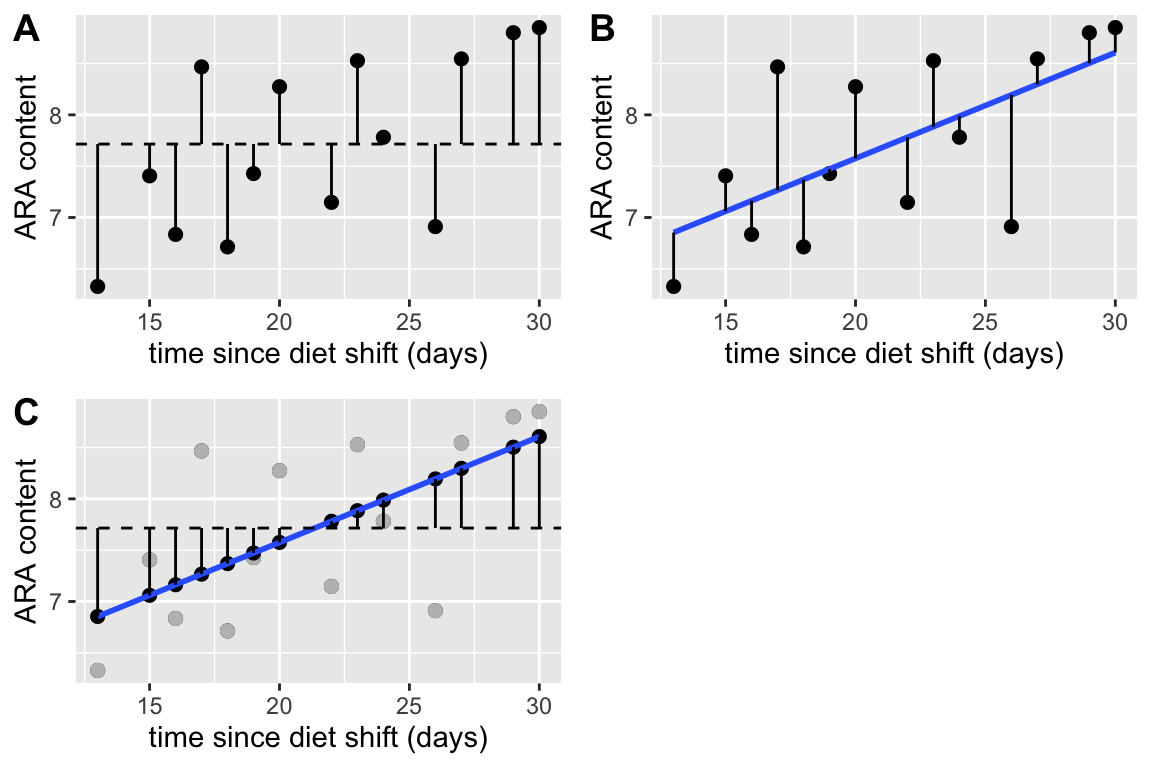

To understand , and its computation, a bit more, let’s look at three kinds of deviations.

Figure 12.1: Three kinds of deviations from a fit model. A. Deviations of the measured values from the mean. These are in the numerator of the equation of the sample variance. The dashed line is the mean ARA content. B. Deviations of the measured values from the modeled values. The sum of these deviations squared is what is minimized in an OLS fit. C. Deviations of the modeled values from the mean ARA content. The measured values are in gray, the modeled values in black

Figure 12.1A shows the deviations from the measured values to the mean value (dashed line). These are the deviations in the numerator of the equation to compute the variance of . Figure 12.1B shows the deviations of the measured values from the modeled values. The sum of these deviations squared is what is minimized by the OLS fit. The bigger these deviations are, the worse the model fit. Figure 12.1C shows the deviations of the modeled values to the mean value. The bigger these deviations are, the better the model fit.

The sums of the squares of these deviations (or “sums of squares”) have names:

Again, is the numerator of the equation for the sample variance. It is called “s-s-total” because . That is, the total sums of squares can be decomposed into two components: the modeled sums of squares and the error sums of squares. Given these components, it’s easy to understand

is the fraction of the total sums of squares that is due to (or “explained by”) the model sums of squares. Above I said that is the fraction of variance explained by the model. Equation xxx is a ratio of variance, but the in both the numerator and the denominator cancel out. Finally, many sources give the equation for as

which is an obvious alternative given the decomposition. I prefer the former equation because it emphasizes the model fit instead of model ill-fit.